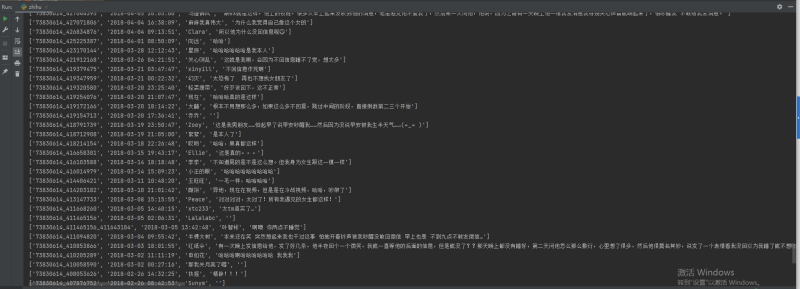

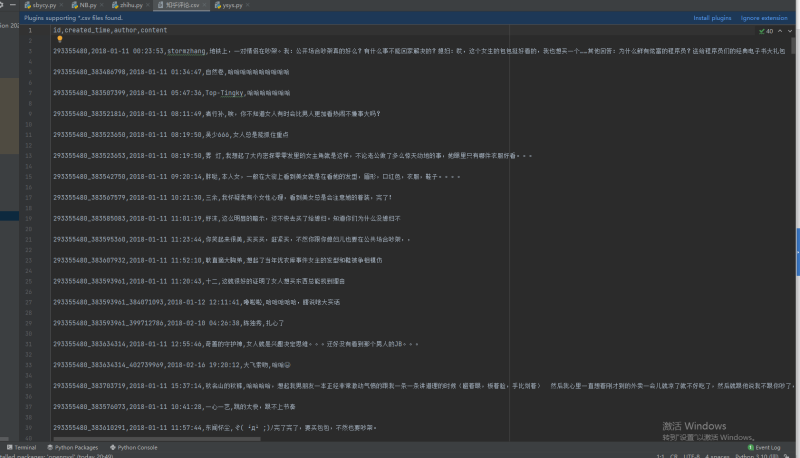

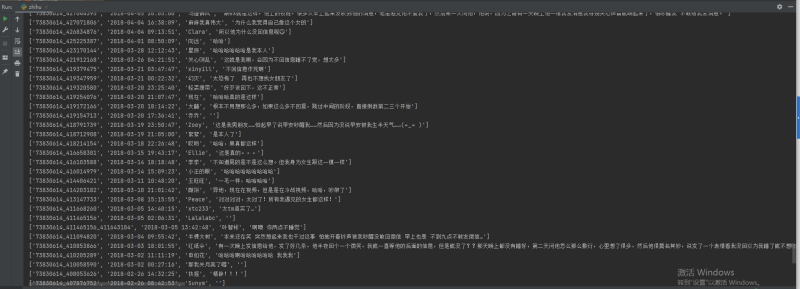

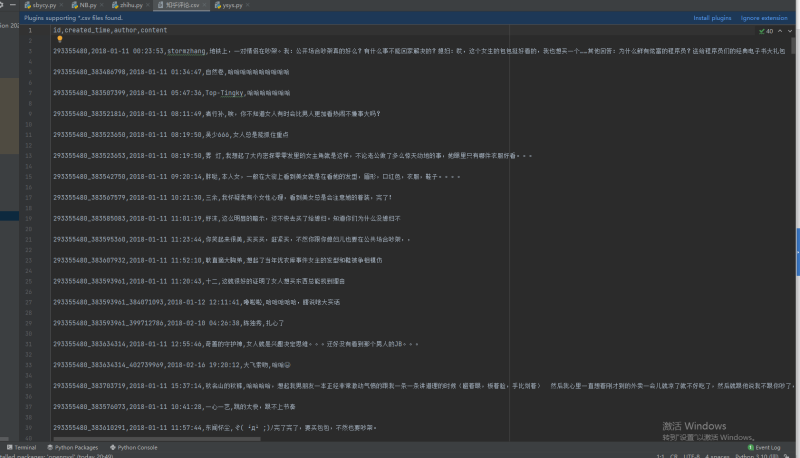

```python
import csv
import json
import time

import requests
from lxml import etree

# HTTP request headers: a browser User-Agent so the API does not reject the request
headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 '
                  '(KHTML, like Gecko) Chrome/64.0.3282.140 Safari/537.36',
}

# Answers endpoint for question 20270540, found in the browser DevTools (F12) Network tab.
# {0} is the offset used for paging; each page holds limit=5 answers.
ANSWERS_URL = (
    'https://www.zhihu.com/api/v4/questions/20270540/answers'
    '?include=data[*].is_normal,admin_closed_comment,reward_info,is_collapsed,annotation_action,'
    'annotation_detail,collapse_reason,is_sticky,collapsed_by,suggest_edit,comment_count,can_comment,'
    'content,editable_content,attachment,voteup_count,reshipment_settings,comment_permission,created_time,'
    'updated_time,review_info,relevant_info,question,excerpt,is_labeled,paid_info,paid_info_content,'
    'relationship.is_authorized,is_author,voting,is_thanked,is_nothelp,is_recognized;'
    'data[*].mark_infos[*].url;data[*].author.follower_count,vip_info,badge[*].topics;'
    'data[*].settings.table_of_content.enabled&limit=5&offset={0}&platform=desktop&sort_by=default'
)
# Root comments of one answer: {0} is the answer ID, {1} the offset (20 comments per page)
COMMENTS_URL = ('https://www.zhihu.com/api/v4/answers/{0}/root_comments'
                '?order=normal&limit=20&offset={1}&status=open')


def fetch_json(url):
    """GET url, retrying on network errors, and return the decoded JSON body."""
    while True:
        try:
            res = requests.get(url, headers=headers, timeout=10)
            res.encoding = 'utf-8'
            return json.loads(res.text)  # parse the response text as JSON
        except requests.RequestException:
            time.sleep(2)  # wait before retrying instead of hammering the server


def fmt_time(ts):
    """Convert a Unix timestamp to local time as 'YYYY-MM-DD HH:MM:SS'."""
    return time.strftime('%Y-%m-%d %H:%M:%S', time.localtime(ts))


def html_text(html):
    """Join the text of all <p> elements; empty or unparsable content yields ''."""
    root = etree.HTML(html) if html else None
    return '' if root is None else ''.join(root.xpath('//p//text()'))


def A(writer):
    """Walk every page of answers, write each answer, then fetch its comments."""
    offset = 0
    while True:
        js = fetch_json(ANSWERS_URL.format(offset))  # format(offset) selects the page
        is_end = js['paging']['is_end']
        # Extract the fields we need from each answer
        for answer in js['data']:
            id_1 = str(answer['id'])
            row = [id_1, fmt_time(answer['created_time']),
                   answer['author']['name'], html_text(answer['content'])]
            writer.writerow(row)
            print(row)
            if (not answer['admin_closed_comment'] and answer['can_comment']['status']
                    and answer['comment_count'] > 0):
                B(writer, id_1)
        offset += 5
        print('Finished page {0}'.format(offset // 5))
        if is_end:
            break
        time.sleep(2)


def B(writer, id_1):
    """Write all root comments of answer id_1, followed by their child comments."""
    offset = 0
    while True:
        jsC = fetch_json(COMMENTS_URL.format(id_1, offset))
        is_end = jsC['paging']['is_end']
        for data in jsC['data']:
            ID_2 = id_1 + '_' + str(data['id'])
            row = [ID_2, fmt_time(data['created_time']),
                   data['author']['member']['name'], html_text(data['content'])]
            writer.writerow(row)
            print(row)
            # Replies get IDs of the form <answer>_<comment>_<reply>
            for J in data.get('child_comments', []):
                row = [ID_2 + '_' + str(J['id']), fmt_time(J['created_time']),
                       J['author']['member']['name'], html_text(J['content'])]
                writer.writerow(row)
                print(row)
        offset += 20
        if is_end:
            break
        time.sleep(2)


if __name__ == '__main__':
    # Write the collected answers and comments to a CSV file (知乎评论 = "Zhihu comments").
    # newline='' prevents csv from emitting blank rows on Windows.
    with open('知乎评论.csv', 'w', encoding='utf-8', newline='') as f1:
        writer = csv.writer(f1)
        writer.writerow(['id', 'created_time', 'author', 'content'])
        A(writer)
```
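The script's two core patterns can be checked without touching the network. The first is offset pagination that stops once `paging.is_end` is true. The second is flattening root comments plus their `child_comments` into rows with composite IDs. The following is a minimal sketch under stated assumptions. `paginate`, `flatten_comments` and the `fetch_page` callback are hypothetical names; in practice `fetch_page` would wrap the `requests.get` calls of the script. The response shape (`data`, `paging.is_end`, `author.member.name`, `child_comments`) is inferred from the fields the script reads, not from any official Zhihu API documentation.

```python
from typing import Any, Callable, Dict, Iterator, List

# Assumed shape of one decoded API page: {"data": [...], "paging": {"is_end": bool}}
Page = Dict[str, Any]


def paginate(fetch_page: Callable[[int, int], Page], limit: int) -> Iterator[Dict[str, Any]]:
    """Yield every item from an offset/limit API until paging.is_end is true.

    fetch_page(offset, limit) is a hypothetical callback that returns one decoded page.
    """
    offset = 0
    while True:
        page = fetch_page(offset, limit)
        yield from page["data"]
        if page["paging"]["is_end"]:
            break
        offset += limit  # advance one page, like the += 5 / += 20 steps in the script


def flatten_comments(answer_id: str, comments: List[Dict[str, Any]]) -> List[List[str]]:
    """Turn root comments and their child_comments into [id, author] rows.

    Root comments get "<answer>_<comment>"; replies get "<answer>_<comment>_<reply>".
    """
    rows: List[List[str]] = []
    for comment in comments:
        root_id = f"{answer_id}_{comment['id']}"
        rows.append([root_id, comment["author"]["member"]["name"]])
        for child in comment.get("child_comments", []):
            rows.append([f"{root_id}_{child['id']}", child["author"]["member"]["name"]])
    return rows


if __name__ == "__main__":
    # Made-up two-page response standing in for the real API
    pages = {
        0: {"data": [{"id": 1}, {"id": 2}], "paging": {"is_end": False}},
        2: {"data": [{"id": 3}], "paging": {"is_end": True}},
    }
    print([item["id"] for item in paginate(lambda off, lim: pages[off], limit=2)])
    # → [1, 2, 3]

    comments = [{"id": 10, "author": {"member": {"name": "a"}},
                 "child_comments": [{"id": 11, "author": {"member": {"name": "b"}}}]}]
    print(flatten_comments("99", comments))
    # → [['99_10', 'a'], ['99_10_11', 'b']]
```

The HTTP call sits behind a callback so that the stopping rule and the ID scheme can be tested with canned pages. The real scraper then only has to supply a `fetch_page` that builds the URL for a given offset.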
